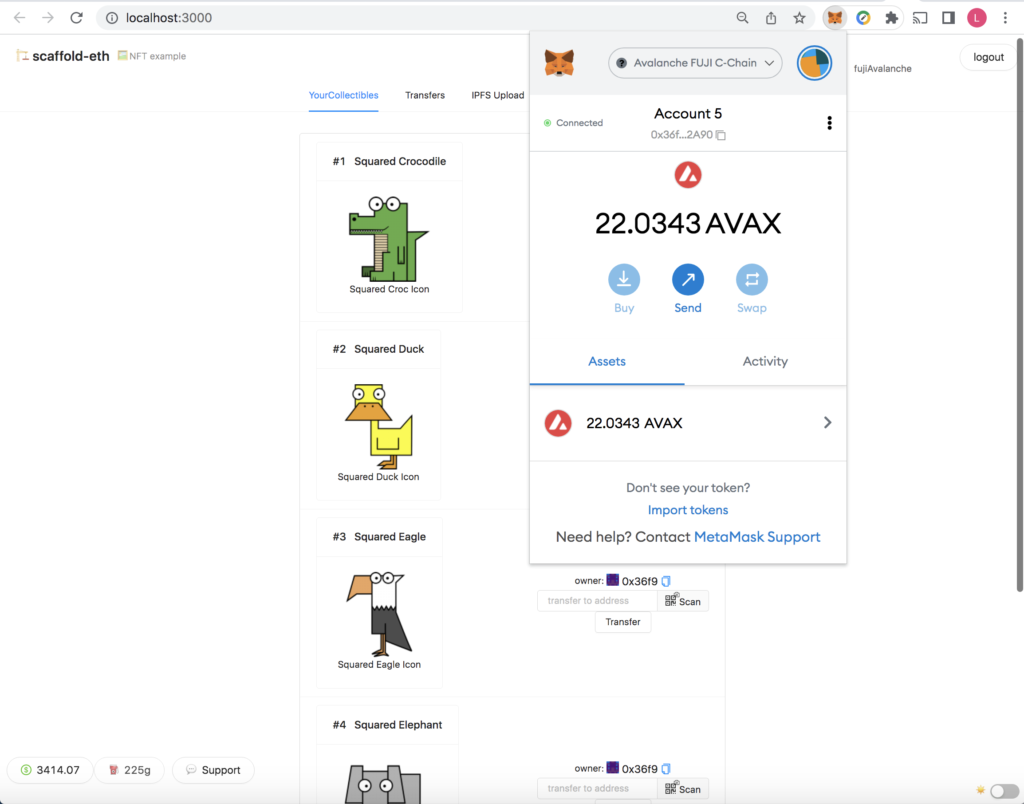

Having immersed myself in coding JavaScript exclusively with Node.js and React over the past couple of months, I’ve come to appreciate the versatility and robustness the “combo” has to offer. I’ve always liked the minimalist design of Node.js, and would always consider it a top candidate whenever an app/API server is needed. Besides ordinary app servers, Node has also been picked on a few occasions to serve as the server for decentralized applications (dApps) that involve deploying smart contracts to public blockchains. In fact, Node and React are also a popular tech stack for dApp frameworks such as Scaffold-ETH.

React & React Redux

React is relatively new to me, though it’s rather easy to pick up the basics from React’s official site. Many tutorials out there also showcase how to build applications with React along with the feature-rich toolset of the React ecosystem. For instance, this tutorial code repo offers helpful insight into developing a React application with basic CRUD.

React can be complemented with Redux, which provides a central store for state updates in the UI components. In contrast to the local state maintained within a React component (often used for handling interactive changes to input form elements), the central store can be shared across multiple components. That’s a key feature for the R&D project at hand.

Rather than providing a plain global state repository for direct access, the Redux store is by design “decoupled” from the components. With React Redux, state changes are expressed as custom actions identified by user-defined action types. To change state, a component invokes the dispatch() function, which is the only mechanism that triggers a state change.
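To make the dispatch-only flow concrete, here is a toy store in plain JavaScript. It is a simplified sketch of the idea under my own assumptions, not Redux’s actual implementation; `createToyStore`, the `@@toy/INIT` action, and the `counter` reducer are made-up names for illustration:

```javascript
// Toy store (hypothetical, NOT Redux's source): state can only change via dispatch().
function createToyStore(reducer, preloadedState) {
  // Run the reducer once so a default parameter can supply the initial state.
  let state = reducer(preloadedState, { type: "@@toy/INIT" });
  const listeners = new Set();

  return {
    getState: () => state,
    dispatch(action) {
      // The reducer computes the next state from the current state and the action.
      state = reducer(state, action);
      // Notify subscribers (in React Redux, this is what lets components re-render).
      listeners.forEach((listener) => listener());
      return action;
    },
    subscribe(listener) {
      listeners.add(listener);
      return () => listeners.delete(listener); // unsubscribe function
    },
  };
}

// Hypothetical reducer used only for this illustration.
const counter = (count = 0, action) => {
  switch (action.type) {
    case "INCREMENT":
      return count + 1;
    default:
      return count;
  }
};

const store = createToyStore(counter);
const unsubscribe = store.subscribe(() => console.log("state is now", store.getState()));
store.dispatch({ type: "INCREMENT" }); // logs: state is now 1
store.dispatch({ type: "UNKNOWN" });   // logs: state is now 1 (the reducer ignored it)
unsubscribe();
store.dispatch({ type: "INCREMENT" }); // no log; the state is now 2
```

Components never assign to `state` directly; they can only read it through `getState()` and request changes through `dispatch()`, which is the decoupling described above.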

Redux actions & reducers

In general, a Redux action, which is often dispatched in response to a UI event (e.g. a click on a button), mainly does two things:

- It carries out the defined operation, which is often an asynchronous function that invokes a user-defined service, for instance a client HTTP call to a Node.js server.
- It connects with the Redux store and gets funneled into a reduction process. The reduction is performed through a user-defined reducer, which typically aggregates the payload into the state according to the corresponding action type.

An action might look something like below:

```javascript
const myAction = () => async (dispatch) => {
  try {
    const res = await myService.someFunction();

    dispatch({
      type: someActionType,
      payload: res.data,
    });
  } catch (err) {
    ...
  }
};
```
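Dispatching a function rather than a plain action object only works because middleware intercepts it. The sketch below mimics conceptually what thunk middleware such as Redux Thunk does; `withThunk`, the store stub, and the `myService` stand-in are hypothetical and not the middleware’s real source. It also shows why the promise returned by the async function comes back out of `dispatch()`:

```javascript
// Simplified stand-in for thunk middleware (hypothetical, not Redux Thunk's source):
// functions are called with (dispatch, getState); plain objects go to the store.
function withThunk(store) {
  const dispatch = (action) =>
    typeof action === "function"
      ? action(dispatch, store.getState) // returns whatever the thunk returns, e.g. a promise
      : store.dispatch(action);
  return dispatch;
}

// Hypothetical service standing in for a client HTTP call to a Node.js server.
const myService = {
  someFunction: async () => ({ data: { message: "hello" } }),
};

const someActionType = "SOME_ACTION_TYPE";

const myAction = () => async (dispatch) => {
  const res = await myService.someFunction();
  dispatch({ type: someActionType, payload: res.data });
  return res.data;
};

// Store stub that only records the plain actions that reach it.
const received = [];
const storeStub = {
  getState: () => ({}),
  dispatch: (action) => {
    received.push(action);
    return action;
  },
};

const dispatch = withThunk(storeStub);

dispatch(myAction()).then((data) => {
  console.log(data.message);     // hello
  console.log(received[0].type); // SOME_ACTION_TYPE
});
```

In a real app, Redux Toolkit’s `configureStore()` includes thunk middleware by default; with the legacy `createStore()`, it has to be added through `applyMiddleware()`.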

whereas a reducer generally has the following function signature:

```javascript
// initialState is used when the store first calls the reducer with no state yet
const myReducer = (currState = initialState, action) => {
  const { type, payload } = action;

  switch (type) {
    case someActionType:
      return someFormOfPayload;
    case anotherActionType:
      return anotherFormOfPayload;
    ...
    default:
      return currState;
  }
};
```
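Because a reducer is a pure `(state, action) => newState` function, replaying a list of actions through `Array.prototype.reduce` yields the final state, which is where the name “reducer” comes from. A minimal sketch, using a hypothetical `itemsReducer` and made-up action types:

```javascript
// Hypothetical action types and reducer, for illustration only.
const ADD_ITEM = "ADD_ITEM";
const REMOVE_ITEM = "REMOVE_ITEM";

const initialState = [];

const itemsReducer = (currState = initialState, action) => {
  const { type, payload } = action;
  switch (type) {
    case ADD_ITEM:
      return [...currState, payload]; // new array; currState is not mutated
    case REMOVE_ITEM:
      return currState.filter((item) => item !== payload);
    default:
      return currState; // unknown actions return the very same reference
  }
};

const actions = [
  { type: ADD_ITEM, payload: "a" },
  { type: ADD_ITEM, payload: "b" },
  { type: "SOMETHING_ELSE" },
  { type: REMOVE_ITEM, payload: "a" },
];

// Passing undefined as the seed lets the default parameter supply initialState.
const finalState = actions.reduce(itemsReducer, undefined);
console.log(finalState); // [ 'b' ]
```

Returning `currState` unchanged in the `default` branch matters: it lets code that compares references (such as React Redux deciding whether to re-render) detect that nothing changed.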

Example of a Redux action

${react-project-root}/src/actions/user.js

```javascript
import {
  CREATE_USER,
  RETRIEVE_USERS,
  UPDATE_USER,
  DELETE_USER,
} from "./types";
import UserDataService from "../services/user.service";

export const createUser = (username, password, email, firstName, lastName) => async (dispatch) => {
  try {
    const res = await UserDataService.create({ username, password, email, firstName, lastName });

    dispatch({
      type: CREATE_USER,
      payload: res.data,
    });

    return Promise.resolve(res.data);
  } catch (err) {
    return Promise.reject(err);
  }
};

export const findUsersByEmail = (email) => async (dispatch) => {
  try {
    const res = await UserDataService.findByEmail(email);

    dispatch({
      type: RETRIEVE_USERS,
      payload: res.data,
    });
  } catch (err) {
    console.error(err);
  }
};

export const updateUser = (id, data) => async (dispatch) => {
  try {
    const res = await UserDataService.update(id, data);

    dispatch({
      type: UPDATE_USER,
      // Include the id: the reducer locates the user to update via payload.id
      payload: { id, ...data },
    });

    return Promise.resolve(res.data);
  } catch (err) {
    return Promise.reject(err);
  }
};

export const deleteUser = (id) => async (dispatch) => {
  try {
    await UserDataService.delete(id);

    dispatch({
      type: DELETE_USER,
      payload: { id },
    });
  } catch (err) {
    console.error(err);
  }
};
```
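Since each action creator returns a plain async function of `dispatch`, it can be exercised without a Redux store by passing in a recording `dispatch`. The sketch below assumes a hypothetical in-memory `UserDataService` stub (the real service presumably makes HTTP calls to the Node.js server) and mirrors `updateUser` and `deleteUser`. The original’s `return Promise.reject(err)` in the catch block is equivalent to letting the error propagate, so the try/catch is omitted here:

```javascript
const UPDATE_USER = "UPDATE_USER";
const DELETE_USER = "DELETE_USER";

// Hypothetical in-memory stand-in for ../services/user.service
const db = new Map([[1, { id: 1, email: "a@example.com" }]]);

const UserDataService = {
  async update(id, data) {
    if (!db.has(id)) throw new Error(`User ${id} not found`);
    const updated = { ...db.get(id), ...data };
    db.set(id, updated);
    return { data: updated }; // mimic an HTTP response object with a `data` field
  },
  async delete(id) {
    db.delete(id);
    return { data: { id } };
  },
};

const updateUser = (id, data) => async (dispatch) => {
  const res = await UserDataService.update(id, data);
  dispatch({ type: UPDATE_USER, payload: { id, ...data } });
  return res.data; // a rejected service call propagates to the caller instead
};

const deleteUser = (id) => async (dispatch) => {
  await UserDataService.delete(id);
  dispatch({ type: DELETE_USER, payload: { id } });
};

async function demo() {
  const dispatched = [];
  const recordDispatch = (action) => dispatched.push(action);

  await updateUser(1, { email: "b@example.com" })(recordDispatch);
  await deleteUser(1)(recordDispatch);

  try {
    await updateUser(99, { email: "x@example.com" })(recordDispatch);
  } catch (err) {
    console.log(err.message); // User 99 not found
  }
  return dispatched;
}

demo().then((dispatched) => console.log(dispatched.map((a) => a.type)));
// [ 'UPDATE_USER', 'DELETE_USER' ]
```

The failed update never reaches `dispatch`, so the store would stay untouched while the caller’s `.catch()` handles the error.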

Example of a Redux reducer

${react-project-root}/src/reducers/users.js

```javascript
import {
  CREATE_USER,
  RETRIEVE_USERS,
  UPDATE_USER,
  DELETE_USER,
} from "../actions/types";

const initState = [];

function userReducer(users = initState, action) {
  const { type, payload } = action;

  switch (type) {
    case CREATE_USER:
      return [...users, payload];

    case RETRIEVE_USERS:
      return payload;

    case UPDATE_USER:
      return users.map((user) => {
        if (user.id === payload.id) {
          return {
            ...user,
            ...payload,
          };
        } else {
          return user;
        }
      });

    case DELETE_USER:
      return users.filter(({ id }) => id !== payload.id);

    default:
      return users;
  }
}

export default userReducer;
```
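Being a pure function, the reducer can be walked through with plain action objects and no store at all. The sketch below is a self-contained copy of `userReducer`; the string values of the action-type constants are an assumption, since `../actions/types` isn’t shown. Note that `UPDATE_USER` matches on `payload.id`, so a payload without an id silently changes nothing:

```javascript
// Assumed values; the real constants live in ../actions/types
const CREATE_USER = "CREATE_USER";
const RETRIEVE_USERS = "RETRIEVE_USERS";
const UPDATE_USER = "UPDATE_USER";
const DELETE_USER = "DELETE_USER";

function userReducer(users = [], action) {
  const { type, payload } = action;
  switch (type) {
    case CREATE_USER:
      return [...users, payload];
    case RETRIEVE_USERS:
      return payload;
    case UPDATE_USER:
      // Only the user whose id matches payload.id gets merged with the payload.
      return users.map((user) => (user.id === payload.id ? { ...user, ...payload } : user));
    case DELETE_USER:
      return users.filter(({ id }) => id !== payload.id);
    default:
      return users;
  }
}

// "@@INIT" is an arbitrary unknown type that triggers the default state.
let state = userReducer(undefined, { type: "@@INIT" });
state = userReducer(state, { type: RETRIEVE_USERS, payload: [{ id: 1, email: "a@example.com" }] });
state = userReducer(state, { type: CREATE_USER, payload: { id: 2, email: "b@example.com" } });
state = userReducer(state, { type: UPDATE_USER, payload: { id: 1, email: "a2@example.com" } });
state = userReducer(state, { type: DELETE_USER, payload: { id: 2 } });
console.log(state); // [ { id: 1, email: 'a2@example.com' } ]

// Without an id in the payload, UPDATE_USER matches no user:
const unchanged = userReducer(state, { type: UPDATE_USER, payload: { email: "x@example.com" } });
console.log(unchanged[0].email); // a2@example.com
```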

React components

React Hooks, which are built-in functions such as useState and useEffect, let UI-centric function components handle local state, side effects, and more.

To dispatch an action, the useDispatch hook from React Redux can be used, as shown below:

```javascript
import { useDispatch, useSelector } from "react-redux";
...
const dispatch = useDispatch();
...
// myAction's async function returns a promise, so dispatch() returns it too
dispatch(myAction(someRecord.id, someRecord))
  .then((response) => {
    setMessage("myAction successful!");
    ...
  })
  .catch((err) => {
    ...
  });
...
```

And to retrieve the state of a certain item from the Redux store, the useSelector hook allows one to extract the target item with a selector function as follows:

```javascript
const myRecords = useSelector((state) => state.myRecords); // Reducer myRecords.js
```
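The key `state.myRecords` (or `state.users` below) exists because the root reducer maps each key of the store’s state to the reducer registered under that key. Redux provides `combineReducers()` for this; the function below is a simplified sketch of the idea, not its source, and the `users`/`theme` slice reducers and selectors are hypothetical:

```javascript
// Simplified stand-in for Redux's combineReducers() (hypothetical, not its source).
function combineReducersSketch(reducers) {
  return (state = {}, action) => {
    const next = {};
    let changed = false;
    for (const [key, reducer] of Object.entries(reducers)) {
      next[key] = reducer(state[key], action); // each slice sees only its own state
      changed = changed || next[key] !== state[key];
    }
    return changed ? next : state; // keep the old reference if no slice changed
  };
}

// Hypothetical slice reducers.
const users = (list = [], action) =>
  action.type === "CREATE_USER" ? [...list, action.payload] : list;
const theme = (mode = "light", action) =>
  action.type === "TOGGLE_THEME" ? (mode === "light" ? "dark" : "light") : mode;

const rootReducer = combineReducersSketch({ users, theme });

let state = rootReducer(undefined, { type: "@@INIT" });
state = rootReducer(state, { type: "CREATE_USER", payload: { id: 1 } });

// Selectors read a slice (or derive a value) from the root state: the same
// kind of function one would pass to useSelector().
const selectUsers = (s) => s.users;
const selectUserCount = (s) => s.users.length;
console.log(selectUsers(state));     // [ { id: 1 } ]
console.log(selectUserCount(state)); // 1
console.log(state.theme);            // light
```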

Example of a React component

${react-project-root}/src/components/UserList.js

```javascript
import React, { useState, useEffect } from "react";
import { useDispatch, useSelector } from "react-redux";
import { Link } from "react-router-dom";
import { retrieveUsers, findUsersByEmail } from "../actions/user";

const UserList = () => {
  const dispatch = useDispatch();
  const users = useSelector((state) => state.users);

  const [currentUser, setCurrentUser] = useState(null);
  const [currentIndex, setCurrentIndex] = useState(-1);
  const [searchEmail, setSearchEmail] = useState("");

  // Load all users once on mount
  useEffect(() => {
    dispatch(retrieveUsers());
  }, [dispatch]);

  const onChangeSearchEmail = (e) => {
    const searchEmail = e.target.value;
    setSearchEmail(searchEmail);
  };

  const refreshData = () => {
    setCurrentUser(null);
    setCurrentIndex(-1);
  };

  const setActiveUser = (user, index) => {
    setCurrentUser(user);
    setCurrentIndex(index);
  };

  const findByEmail = () => {
    refreshData();
    dispatch(findUsersByEmail(searchEmail));
  };

  return (
    <div className="list row">
      <div className="col-md-9">
        <div className="input-group mb-3">
          <input
            type="text"
            className="form-control"
            id="searchByEmail"
            placeholder="Search by email"
            value={searchEmail}
            onChange={onChangeSearchEmail}
          />
          <div className="input-group-append">
            <button className="btn btn-warning m-2" type="button" onClick={findByEmail}>
              Search
            </button>
          </div>
        </div>
      </div>
      <div className="col-md-5">
        <h4>User List</h4>
        <ul className="list-group">
          {users &&
            users.map((user, index) => (
              <li
                className={"list-group-item " + (index === currentIndex ? "active" : "")}
                onClick={() => setActiveUser(user, index)}
                key={user.id} // a stable id, since search results replace the list
              >
                <div className="row">
                  <div className="col-md-2">{user.id}</div>
                  <div className="col-md-10">{user.email}</div>
                </div>
              </li>
            ))}
        </ul>
        <Link to="/add-user" className="btn btn-warning mt-2 mb-2">
          Create a user
        </Link>
      </div>
      <div className="col-md-7">
        {currentUser ? (
          <div>
            <h4>User</h4>
            <div className="row">
              <div className="col-md-3 fw-bold">ID:</div>
              <div className="col-md-9">{currentUser.id}</div>
            </div>
            <div className="row">
              <div className="col-md-3 fw-bold">Username:</div>
              <div className="col-md-9">{currentUser.username}</div>
            </div>
            <div className="row">
              <div className="col-md-3 fw-bold">Email:</div>
              <div className="col-md-9">{currentUser.email}</div>
            </div>
            <div className="row">
              <div className="col-md-3 fw-bold">First Name:</div>
              <div className="col-md-9">{currentUser.firstName}</div>
            </div>
            <div className="row">
              <div className="col-md-3 fw-bold">Last Name:</div>
              <div className="col-md-9">{currentUser.lastName}</div>
            </div>
            <Link to={"/user/" + currentUser.id} className="btn btn-warning mt-2 mb-2">
              Edit
            </Link>
          </div>
        ) : (
          <div>
            <br />
            <p>Please click on a user for details ...</p>
          </div>
        )}
      </div>
    </div>
  );
};

export default UserList;
```

It should be noted that, despite having been stripped down for simplicity, the above sample code may still include a bit too much detail for React beginners. For now, the primary goal is to highlight how an action, dispatched via dispatch() in response to a UI event, interactively updates state in the Redux central store through a corresponding reducer function.

In the next blog post, we’ll dive a little deeper into React components and how they have evolved from the class-based OOP (object-oriented programming) style to the FP (functional programming) style with React Hooks.