By using Android’s TextClock and Location/Geocoder on top of its CameraX API as shown in the previous blog post, we now have a mobile app that overlays live video with time and locale info. This is especially useful when the “when” and “where” are meant to be an integral part of the recordings.

Use cases for ID tagged items & IoT

Below are a few use cases that involve ID tag (e.g. QR code, RFID) or IoT sensor (e.g. ZigBee, LwM2M) technologies.

- Inventory records of tagged products – By video recording the detected tag IDs of tagged products, with time and locale as integral content of the recordings, traceable records become readily available for inventory auditing.

- Provenance of tagged collectibles – Similarly, live videos capturing the time and locale of collectibles/memorabilia (e.g. original artworks) being tagged with unique IDs by their owners at an event (e.g. original artists at an exhibition) can serve as a critical part of the provenance of their authenticity. Potential buyers could verify tag IDs against a trusted tag data source (e.g. a central database or a decentralized blockchain).

- IoT sensors scanning & recording – By bundling the recorder app with a protocol-specific scanner toolkit, IoT sensor devices can be scanned and recorded for testing/auditing purposes.

Recall that the time-locale overlaid recording feature works by recording the camera screen on which the time and locale info are displayed. To maximize flexibility, rather than relying on a third-party screen recording app, we’re going to build our own screen recorder into the app.

HBRecorder

HBRecorder is a popular Android screen recording library. The minimal permissions the library needs in AndroidManifest.xml are:

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />
<uses-permission android:name="android.permission.WRITE_INTERNAL_STORAGE" />
<uses-permission android:name="android.permission.RECORD_AUDIO" />
<uses-permission android:name="android.permission.FOREGROUND_SERVICE" />
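Manifest entries are only half of the picture, since the click handler in the appendix also asks for different runtime permissions depending on the device's API level. The sketch below restates that branching as a plain Java function so it can be read (and run) without the Android SDK. The class name `RecorderPermissions` and its method are hypothetical, and the API levels and permission strings are written out as literals standing in for `Build.VERSION_CODES` and `Manifest.permission`.

```java
import java.util.List;

/**
 * Hypothetical helper (not part of HBRecorder): maps an API level to the runtime
 * permissions that the appendix's click handler checks before recording.
 */
final class RecorderPermissions {
    // Plain ints standing in for Build.VERSION_CODES.Q and TIRAMISU.
    static final int API_Q = 29;
    static final int API_TIRAMISU = 33;

    // String values of the corresponding Manifest.permission constants.
    static final String RECORD_AUDIO = "android.permission.RECORD_AUDIO";
    static final String WRITE_EXTERNAL_STORAGE = "android.permission.WRITE_EXTERNAL_STORAGE";
    static final String POST_NOTIFICATIONS = "android.permission.POST_NOTIFICATIONS";

    private RecorderPermissions() {}

    /** Returns the runtime permissions to request on a device running sdkInt. */
    static List<String> requiredAt(int sdkInt) {
        if (sdkInt >= API_TIRAMISU) {
            // Android 13+: notification permission plus microphone.
            return List.of(POST_NOTIFICATIONS, RECORD_AUDIO);
        } else if (sdkInt >= API_Q) {
            // Android 10-12: recordings go through MediaStore, so no storage permission.
            return List.of(RECORD_AUDIO);
        }
        // Below Android 10: microphone plus legacy external storage.
        return List.of(RECORD_AUDIO, WRITE_EXTERNAL_STORAGE);
    }

    public static void main(String[] args) {
        for (int api : new int[] {28, 29, 33}) {
            System.out.println("API " + api + " -> " + requiredAt(api));
        }
    }
}
```

One thing this makes visible: on Android 13 and above the app requests POST_NOTIFICATIONS at runtime, which would also need its own `uses-permission` entry in the manifest for the request to be grantable.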

Let’s add a start/stop button at the bottom of the UI layout.

content_main.xml:

<?xml version="1.0" encoding="utf-8"?>

<androidx.coordinatorlayout.widget.CoordinatorLayout

xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

app:layout_behavior="@string/appbar_scrolling_view_behavior"

tools:context=".MainActivity"

tools:showIn="@layout/activity_main">

<RelativeLayout

android:layout_width="match_parent"

android:layout_height="match_parent">

...

<RelativeLayout

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:layout_alignParentBottom="true"

android:background="@color/colorPrimary">

<Button

android:id="@+id/button_start"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:text="@string/start_recording"

android:textColor="@android:color/white"

android:background="@drawable/ripple_effect"

tools:text="@string/start_recording"/>

</RelativeLayout>

</RelativeLayout>

</androidx.coordinatorlayout.widget.CoordinatorLayout>

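Next, we make the main class MainActivity implement HBRecorderListener so that custom application logic can run when recording events occur. Along with the listener callbacks, two standard Activity callbacks (the last two below) are overridden to handle permission and screen-capture results: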

- HBRecorderOnStart()

- HBRecorderOnComplete()

- HBRecorderOnError()

- HBRecorderOnPause()

- HBRecorderOnResume()

- onRequestPermissionsResult()

- onActivityResult()

The source code of MainActivity.java, showing the HBRecorder-related parts needed for basic screen recording, is included at the end of this post.

Full source code for the Android time-locale video recorder with simulated ID tag scanning/recording is available at this GitHub repo.

Recording detected barcodes / QR codes

Now that we have a time-locale overlaid screen recording app in place, we’re ready to use it for specific use cases that involve ID tags. If barcodes or QR codes are used for ID tagging, one could use Google’s ML Kit API (in particular, MlKitAnalyzer and BarcodeScanner) to detect them visually in Android’s camera PreviewView.

The following sample code from the Android developers website uses LifecycleCameraController to create an image analyzer with MlKitAnalyzer and sets up a BarcodeScanner for detecting QR codes:

BarcodeScannerOptions options = new BarcodeScannerOptions.Builder()

.setBarcodeFormats(Barcode.FORMAT_QR_CODE)

.build();

BarcodeScanner barcodeScanner = BarcodeScanning.getClient(options);

cameraController.setImageAnalysisAnalyzer(executor,

new MlKitAnalyzer(List.of(barcodeScanner), COORDINATE_SYSTEM_VIEW_REFERENCED,

executor, result -> {

// The value of result.getResult(barcodeScanner) can be used directly for drawing UI overlay.

}));

Source code for the CameraX-MLKit sample can be found in this Android camera-samples repo. The official sample code is in Kotlin, though some Java implementations are available elsewhere.
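Since the analyzer callback fires for every analyzed frame, the same QR code will usually be reported many times while it stays in view. For the recording it is often enough to show each tag ID once, together with the time it was first seen. Below is a minimal sketch of such bookkeeping in plain Java. The class `DetectedCodeLog` is a hypothetical helper, and it assumes the caller has already pulled the raw string values out of the detected barcodes (which may be null when a code cannot be decoded as text).

```java
import java.time.Instant;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical helper: remembers when each QR payload was first seen. */
final class DetectedCodeLog {
    // LinkedHashMap keeps the codes in first-seen order.
    private final Map<String, Instant> firstSeen = new LinkedHashMap<>();

    /** Records the payloads of one analyzed frame and returns how many were new. */
    int recordFrame(List<String> rawValues, Instant frameTime) {
        int added = 0;
        for (String value : rawValues) {
            if (value == null || value.isEmpty()) {
                continue; // skip codes without a usable text payload
            }
            if (firstSeen.putIfAbsent(value, frameTime) == null) {
                added++;
            }
        }
        return added;
    }

    /** One line per distinct code, suitable for a text view on the camera screen. */
    String overlayText() {
        StringBuilder sb = new StringBuilder();
        firstSeen.forEach((value, time) ->
                sb.append(value).append(" @ ").append(time).append('\n'));
        return sb.toString();
    }

    public static void main(String[] args) {
        DetectedCodeLog log = new DetectedCodeLog();
        Instant now = Instant.now();
        log.recordFrame(List.of("TAG-001", "TAG-002"), now);
        log.recordFrame(List.of("TAG-001"), now.plusSeconds(1)); // repeat is ignored
        System.out.print(log.overlayText());
    }
}
```

Inside the analyzer callback, the text returned by `overlayText()` could be pushed to a TextView on the camera screen so that it ends up in the screen recording alongside the time and locale.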

Recording RFID scans

Live recording of detected RFID tags takes a little more effort. A scanner capable of reading RFID tags and transmitting the scanned data to the Android phone (via Bluetooth, wired USB, etc.) will be needed. One of the leading RFID scanner manufacturers is Zebra, which also offers an Android SDK for its products.

The SDK setup would involve downloading the latest RFID API library, configuring the RFID API library via Gradle, creating a new import module (:RFIDAPI3Library) and adding the library as a project-level dependency. To use the RFID scanning features, import the library from within the main app and make class MainActivity implement RFIDHandler’s ResponseHandlerInterface to customize lifecycle routines (e.g. onPause, onResume) and reader operational methods that handle things like the reader trigger being pressed, processing detected tag IDs, etc.

The dependencies in app/build.gradle will include the RFID module.

dependencies {

...

implementation project(':RFIDAPI3Library')

}

MainActivity.java will look something like the following.

public class MainActivity extends AppCompatActivity implements RFIDHandler.ResponseHandlerInterface {

...

RFIDHandler rfidHandler;

@Override

protected void onCreate(Bundle savedInstanceState) {

...

rfidHandler = new RFIDHandler();

...

}

...

@Override

public void handleTagdata(TagData[] tagData) {

final StringBuilder sb = new StringBuilder();

for (int index = 0; index < tagData.length; index++) {

sb.append(tagData[index].getTagID() + "\n");

}

runOnUiThread(new Runnable() {

@Override

public void run() {

textrfid.append(sb.toString());

}

});

}

@Override

public void handleTriggerPress(boolean pressed) {

if (pressed) {

runOnUiThread(new Runnable() {

@Override

public void run() {

textrfid.setText("");

}

});

rfidHandler.performInventory();

} else {

rfidHandler.stopInventory();

}

}

}

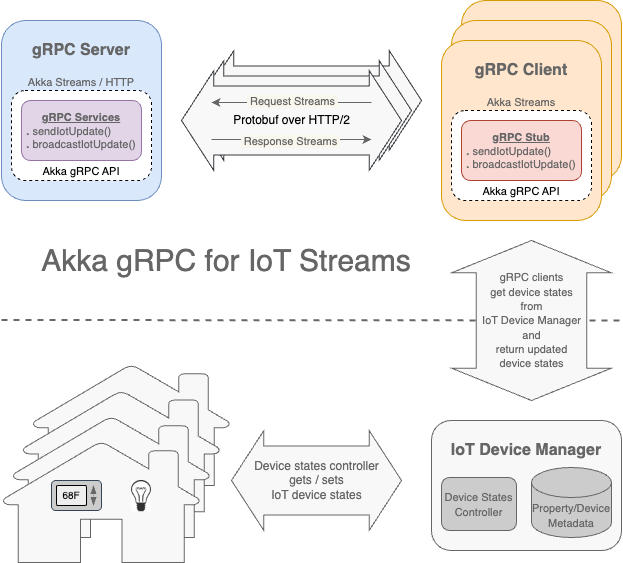
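RFID readers tend to report the same tag many times during a single inventory run, so appending every read to the on-screen text, as handleTagdata() above does, can quickly fill the recording with duplicates. A minimal sketch of collapsing repeated reads into per-tag counts follows. `TagReadCounter` is a hypothetical helper in plain Java, not part of the Zebra SDK, and it assumes the tag IDs have already been extracted as strings. The methods are synchronized on the assumption that reader callbacks may arrive off the UI thread.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: collapses repeated reads of the same tag into a count. */
final class TagReadCounter {
    // Insertion order is kept so tags appear in the order they were first read.
    private final Map<String, Integer> counts = new LinkedHashMap<>();

    /** Adds one batch of tag IDs, e.g. those extracted inside handleTagdata(). */
    synchronized void addBatch(String[] tagIds) {
        for (String id : tagIds) {
            counts.merge(id, 1, Integer::sum);
        }
    }

    /** Forgets all reads, e.g. when the trigger is pressed for a new inventory run. */
    synchronized void clear() {
        counts.clear();
    }

    /** One line per distinct tag with its read count, for display on screen. */
    synchronized String render() {
        StringBuilder sb = new StringBuilder();
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            sb.append(e.getKey()).append(" x").append(e.getValue()).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        TagReadCounter counter = new TagReadCounter();
        counter.addBatch(new String[] {"E2001", "E2002", "E2001"});
        System.out.print(counter.render());
    }
}
```

With such a helper, the UI update would replace the text view's content with `render()` via setText() rather than appending to it, and handleTriggerPress() would call `clear()` where it currently blanks the text view.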

IoT sensors scanning/recording

In IoT, commonly used protocols include LwM2M (Lightweight M2M), MQTT (MQ Telemetry Transport), ZigBee (an IEEE 802.15.4 based WPAN protocol), Z-Wave, etc. With its emphasis on being lightweight and interoperable, LwM2M has gained a lot of momentum in recent years. For ZigBee, since the protocol spans from the application layer down to the physical layer, a ZigBee hub that connects to the Android device via Bluetooth/WiFi might be needed.

Specific expertise in the IoT protocol of choice is required for the video recorder app implementation. That’s beyond the scope of what we would like to focus on in this blog post. For those who want to delve deeper into the details, some open-source libraries that might be of interest are the Leshan Java library for LwM2M (server & client Java implementations) and ZigBee API for Java.

Final thoughts

Obviously, there are countless other use cases in which the time-locale video recorder can be used for recording, reconciling and authenticating valuable items or products via QR code, RFID or IoT communication protocols like those described above. What’s remarkable about such a customizable video recorder is that, despite its simplicity, the app can be readily integrated with any suitable mobile SDK or library module for a given ID tag / IoT sensor reader to provide a low-cost solution on a consumer-grade mobile device usable virtually any time and anywhere.

Appendix

MainActivity.java highlighted with HBRecorder implementation:

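import com.hbisoft.hbrecorder.HBRecorder;

import com.hbisoft.hbrecorder.HBRecorderListener;

// Error codes referenced in HBRecorderOnError() below

import static com.hbisoft.hbrecorder.Constants.MAX_FILE_SIZE_REACHED_ERROR;

import static com.hbisoft.hbrecorder.Constants.SETTINGS_ERROR;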

public class MainActivity extends AppCompatActivity implements HBRecorderListener {

...

private HBRecorder hbRecorder;

private static final int SCREEN_RECORD_REQUEST_CODE = 777;

private static final int PERMISSION_REQ_ID_RECORD_AUDIO = 22;

private static final int PERMISSION_REQ_POST_NOTIFICATIONS = 33;

private static final int PERMISSION_REQ_ID_WRITE_EXTERNAL_STORAGE = PERMISSION_REQ_ID_RECORD_AUDIO + 1;

private boolean hasPermissions = false;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

...

initViews();

setOnClickListeners();

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.LOLLIPOP) {

hbRecorder = new HBRecorder(this, this);

if (hbRecorder.isBusyRecording()) {

startbtn.setText(R.string.stop_recording);

}

}

}

...

private void createFolder() {

File f1 = new File(Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_MOVIES), "HBRecorder");

if (!f1.exists()) {

if (f1.mkdirs()) {

Log.i("Folder ", "created");

}

}

}

private void initViews() {

startbtn = findViewById(R.id.button_start);

}

private void setOnClickListeners() {

startbtn.setOnClickListener(v -> {

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.LOLLIPOP) {

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.TIRAMISU) {

if (checkSelfPermission(Manifest.permission.POST_NOTIFICATIONS, PERMISSION_REQ_POST_NOTIFICATIONS) && checkSelfPermission(Manifest.permission.RECORD_AUDIO, PERMISSION_REQ_ID_RECORD_AUDIO)) {

hasPermissions = true;

}

}

else if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.Q) {

if (checkSelfPermission(Manifest.permission.RECORD_AUDIO, PERMISSION_REQ_ID_RECORD_AUDIO)) {

hasPermissions = true;

}

} else {

if (checkSelfPermission(Manifest.permission.RECORD_AUDIO, PERMISSION_REQ_ID_RECORD_AUDIO) && checkSelfPermission(Manifest.permission.WRITE_EXTERNAL_STORAGE, PERMISSION_REQ_ID_WRITE_EXTERNAL_STORAGE)) {

hasPermissions = true;

}

}

if (hasPermissions) {

if (hbRecorder.isBusyRecording()) {

hbRecorder.stopScreenRecording();

startbtn.setText(R.string.start_recording);

}

//else start recording

else {

startRecordingScreen();

}

}

} else {

showLongToast("This library requires API 21 or higher");

}

});

}

@Override

public void HBRecorderOnStart() {

// Informational only, so log at info level rather than error level

Log.i("HBRecorder", "HBRecorderOnStart called");

}

@Override

public void HBRecorderOnComplete() {

startbtn.setText(R.string.start_recording);

showLongToast("Saved Successfully");

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.LOLLIPOP) {

if (hbRecorder.wasUriSet()) {

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.Q ) {

updateGalleryUri();

} else {

refreshGalleryFile();

}

}else{

refreshGalleryFile();

}

}

}

@Override

public void HBRecorderOnError(int errorCode, String reason) {

if (errorCode == SETTINGS_ERROR) {

showLongToast(getString(R.string.settings_not_supported_message));

} else if ( errorCode == MAX_FILE_SIZE_REACHED_ERROR) {

showLongToast(getString(R.string.max_file_size_reached_message));

} else {

showLongToast(getString(R.string.general_recording_error_message));

Log.e("HBRecorderOnError", reason);

}

startbtn.setText(R.string.start_recording);

}

@Override

public void HBRecorderOnPause() {

}

@Override

public void HBRecorderOnResume() {

}

@RequiresApi(api = Build.VERSION_CODES.LOLLIPOP)

private void refreshGalleryFile() {

MediaScannerConnection.scanFile(this,

new String[]{hbRecorder.getFilePath()}, null,

new MediaScannerConnection.OnScanCompletedListener() {

public void onScanCompleted(String path, Uri uri) {

Log.i("ExternalStorage", "Scanned " + path + ":");

Log.i("ExternalStorage", "-> uri=" + uri);

}

});

}

@RequiresApi(api = Build.VERSION_CODES.Q)

private void updateGalleryUri(){

contentValues.clear();

contentValues.put(MediaStore.Video.Media.IS_PENDING, 0);

getContentResolver().update(mUri, contentValues, null, null);

}

@RequiresApi(api = Build.VERSION_CODES.LOLLIPOP)

private void startRecordingScreen() {

quickSettings();

MediaProjectionManager mediaProjectionManager = (MediaProjectionManager) getSystemService(Context.MEDIA_PROJECTION_SERVICE);

if (mediaProjectionManager == null) {

// Without the projection service there is no capture intent to launch

showLongToast("Screen capture is not available on this device");

return;

}

Intent permissionIntent = mediaProjectionManager.createScreenCaptureIntent();

startActivityForResult(permissionIntent, SCREEN_RECORD_REQUEST_CODE);

startbtn.setText(R.string.stop_recording);

}

@RequiresApi(api = Build.VERSION_CODES.LOLLIPOP)

private void quickSettings() {

hbRecorder.setAudioBitrate(128000);

hbRecorder.setAudioSamplingRate(44100);

hbRecorder.recordHDVideo(true);

hbRecorder.isAudioEnabled(true);

hbRecorder.setNotificationSmallIcon(R.drawable.icon);

hbRecorder.setNotificationTitle(getString(R.string.stop_recording_notification_title));

hbRecorder.setNotificationDescription(getString(R.string.stop_recording_notification_message));

}

private boolean checkSelfPermission(String permission, int requestCode) {

if (ContextCompat.checkSelfPermission(this, permission) != PackageManager.PERMISSION_GRANTED) {

ActivityCompat.requestPermissions(this, new String[]{permission}, requestCode);

return false;

}

return true;

}

@Override

public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions, @NonNull int[] grantResults) {

super.onRequestPermissionsResult(requestCode, permissions, grantResults);

switch (requestCode) {

case PERMISSION_REQ_POST_NOTIFICATIONS:

if (grantResults[0] == PackageManager.PERMISSION_GRANTED) {

checkSelfPermission(Manifest.permission.RECORD_AUDIO, PERMISSION_REQ_ID_RECORD_AUDIO);

} else {

hasPermissions = false;

showLongToast("No permission for " + Manifest.permission.POST_NOTIFICATIONS);

}

break;

case PERMISSION_REQ_ID_RECORD_AUDIO:

if (grantResults[0] == PackageManager.PERMISSION_GRANTED) {

checkSelfPermission(Manifest.permission.WRITE_EXTERNAL_STORAGE, PERMISSION_REQ_ID_WRITE_EXTERNAL_STORAGE);

} else {

hasPermissions = false;

showLongToast("No permission for " + Manifest.permission.RECORD_AUDIO);

}

break;

case PERMISSION_REQ_ID_WRITE_EXTERNAL_STORAGE:

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.Q) {

hasPermissions = true;

startRecordingScreen();

} else {

if (grantResults[0] == PackageManager.PERMISSION_GRANTED) {

hasPermissions = true;

//Permissions was provided

//Start screen recording

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.LOLLIPOP) {

startRecordingScreen();

}

} else {

hasPermissions = false;

showLongToast("No permission for " + Manifest.permission.WRITE_EXTERNAL_STORAGE);

}

}

break;

default:

break;

}

}

@Override

protected void onActivityResult(int requestCode, int resultCode, Intent data) {

super.onActivityResult(requestCode, resultCode, data);

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.LOLLIPOP) {

if (requestCode == SCREEN_RECORD_REQUEST_CODE) {

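if (resultCode == RESULT_OK) {

setOutputPath();

hbRecorder.startScreenRecording(data, resultCode);

} else {

// Screen capture was declined, so revert the button label set in startRecordingScreen()

startbtn.setText(R.string.start_recording);

}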

}

}

}

ContentResolver resolver;

ContentValues contentValues;

Uri mUri;

@RequiresApi(api = Build.VERSION_CODES.LOLLIPOP)

private void setOutputPath() {

String filename = generateFileName();

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.Q) {

resolver = getContentResolver();

contentValues = new ContentValues();

contentValues.put(MediaStore.Video.Media.RELATIVE_PATH, "Movies/" + "HBRecorder");

contentValues.put(MediaStore.Video.Media.TITLE, filename);

contentValues.put(MediaStore.MediaColumns.DISPLAY_NAME, filename);

contentValues.put(MediaStore.MediaColumns.MIME_TYPE, "video/mp4");

mUri = resolver.insert(MediaStore.Video.Media.EXTERNAL_CONTENT_URI, contentValues);

hbRecorder.setFileName(filename);

hbRecorder.setOutputUri(mUri);

} else {

createFolder();

hbRecorder.setOutputPath(Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_MOVIES) +"/HBRecorder");

}

}

private String generateFileName() {

SimpleDateFormat formatter = new SimpleDateFormat("yyyy-MM-dd-HH-mm-ss", Locale.getDefault());

Date curDate = new Date();

// The pattern contains no spaces, so the formatted value can be returned as-is

return formatter.format(curDate);

}

private void showLongToast(final String msg) {

Toast.makeText(getApplicationContext(), msg, Toast.LENGTH_LONG).show();

}

}
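As a side note on generateFileName() above: formatting with the device's default locale may produce non-ASCII digits on some locale settings, which is not ideal for file names. Here is a small sketch of an alternative using java.time with a fixed locale and an injectable clock, which also makes the naming easy to test. `RecordingFileNames` is a hypothetical class name, and on Android java.time assumes API 26+ or core library desugaring.

```java
import java.time.Clock;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.Locale;

/** Hypothetical helper: builds timestamp-based names for recordings. */
final class RecordingFileNames {
    // Same pattern as generateFileName(), but not tied to the device's default locale.
    private static final DateTimeFormatter STAMP =
            DateTimeFormatter.ofPattern("yyyy-MM-dd-HH-mm-ss", Locale.ROOT);

    private RecordingFileNames() {}

    /** Formats the clock's current local date-time as a file name. */
    static String next(Clock clock) {
        return STAMP.format(LocalDateTime.now(clock));
    }

    public static void main(String[] args) {
        // In the app this would use the device's time zone.
        System.out.println(next(Clock.systemDefaultZone()));
    }
}
```

Passing `Clock.systemDefaultZone()` mirrors what the original method does, while a fixed clock can be passed in tests to get a predictable name.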